Data Collection

Data collection is one of the most important aspects of this project. There are various sources of airfare data on the Web that we could use to train our models: a multitude of consumer travel sites supply fare information for multiple routes, times, and airlines.

We tried several sources, ranging from APIs to scraping consumer travel websites. This section discusses these sources in detail, along with the importance of the parameters we collected.

Data Sources

Historical airfare data is not readily available on the internet. Therefore, the only option was to collect the data ourselves over a period of time. Companies such as Amadeus and Skyscanner offer APIs; however, the number of flights they returned for a domestic route in India was limited.

Thus we had to explore APIs offered by travel companies operating in India. GoIbibo and Expedia provide ready-to-use APIs that return a set of variables for a given route. However, when we used the GoIbibo API to extract flight prices, the returned parameters were in a complex form and the raw data had to be cleaned several times.

Therefore, we decided to build a web spider that extracts the required values from a website and stores them in a CSV file. We chose to scrape the Makemytrip website using a hand-written spider in Python.

Web Scraper (Python 2.7)

The urllib2 library was used to access the Makemytrip website and load the required page as a JSON object. This object was parsed using built-in Python functions to produce a CSV dataset. The dateutil.rrule module was used to generate the set of dates between two given dates, so that the above steps could be applied to each date in the range. The whole script is open-sourced on GitHub.
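The fetch-and-iterate flow described above might look roughly like the sketch below. It is not the original script: it uses Python 3's `urllib.request` as a stand-in for the Python 2.7 `urllib2` module, and plain `datetime` arithmetic in place of `dateutil.rrule` so that it needs only the standard library. The URL template, the function names, and the airport codes in the usage comment are hypothetical, since the real endpoint is not given here.

```python
import json
from datetime import date, timedelta
from urllib.request import Request, urlopen


def date_range(start, end):
    """Yield every date from start to end, inclusive."""
    for offset in range((end - start).days + 1):
        yield start + timedelta(days=offset)


def build_search_url(origin, destination, departure):
    """Build a search URL for one route and departure date.

    Hypothetical URL template: the real endpoint and its query
    parameters are assumptions made for illustration only.
    """
    return "https://example.com/flights?from={}&to={}&date={}".format(
        origin, destination, departure.strftime("%d-%m-%Y")
    )


def fetch_json(url, timeout=30):
    """Download a URL and decode the response body as JSON."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (illustrative route codes; performs network requests when run):
# for day in date_range(date(2016, 8, 21), date(2016, 11, 21)):
#     payload = fetch_json(build_search_url("DEL", "BOM", day))
```

Keeping the date generation separate from the download step makes it easy to re-run the scraper for a single missed date.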

What We Collected and When

What?

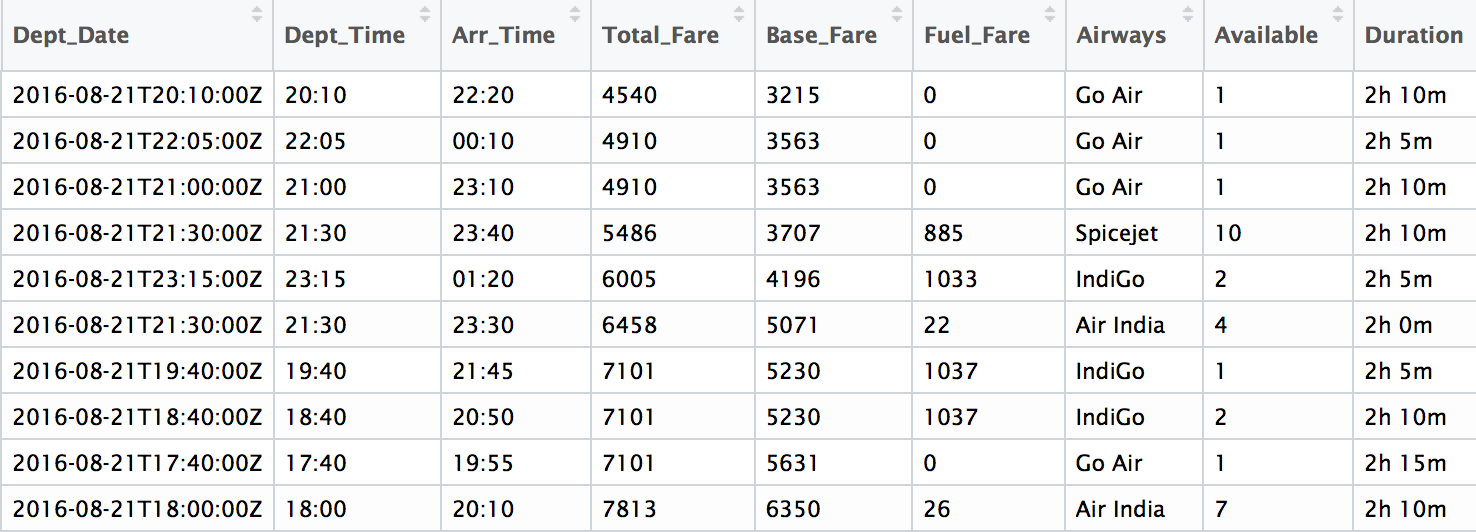

The basic script successfully extracts information from the Makemytrip website and outputs a CSV data file. The next important step is to decide which parameters the flight price prediction algorithm might need.

Makemytrip returns numerous variables for each flight. However, not all of them are required, so we selected the following:

- Origin City

- Destination City

- Departure Date

- Departure Time

- Arrival Time

- Total Fare

- Airway Carrier

- Duration

- Class Type - Economy/Business

- Flight Number

- Hopping - Boolean (whether the flight has a stopover)

- Taken Date - date on which this data was collected
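As a minimal sketch of how the selected parameters could be written to a CSV file, the code below keeps only the chosen fields from one parsed flight record and stamps it with the collection date. The column names, the `to_row` and `write_csv` helpers, and the `stops` key used to derive the hopping flag are all assumptions for illustration; the actual keys in the Makemytrip response are not listed here.

```python
import csv

# Column names are illustrative labels for the parameters listed above.
FIELDS = [
    "origin", "destination", "departure_date", "departure_time",
    "arrival_time", "total_fare", "carrier", "duration",
    "class_type", "flight_number", "hopping", "taken_date",
]


def to_row(flight, taken_date):
    """Keep only the selected fields of one flight record.

    `flight` is a dict parsed from JSON; missing fields become "".
    """
    row = {name: flight.get(name, "") for name in FIELDS}
    # Hypothetical key: assume the record carries a stop count.
    row["hopping"] = bool(flight.get("stops", 0))
    # Date on which this record was collected.
    row["taken_date"] = taken_date.isoformat()
    return row


def write_csv(rows, handle):
    """Write a header line followed by one CSV line per row."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
```

Dropping unused variables at this stage keeps the daily files small and gives every file the same column order, which simplifies merging them later.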

When?

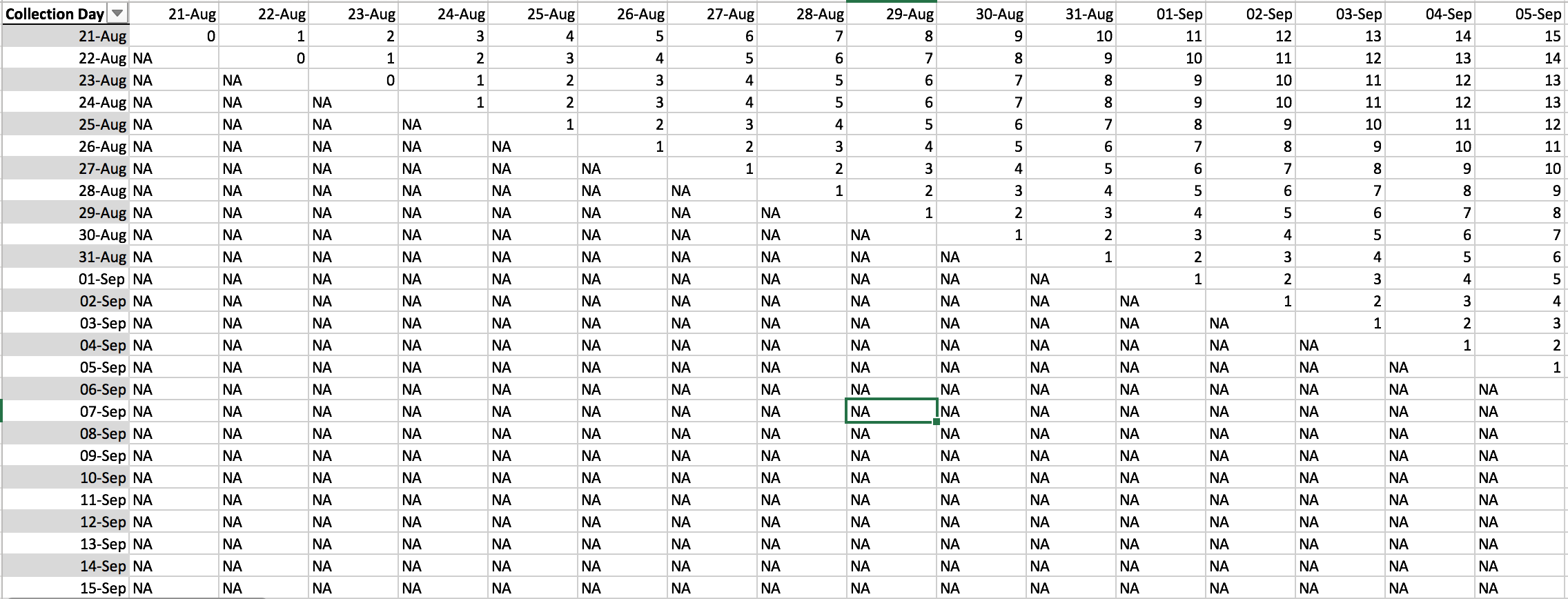

We collected prices for flights with departure dates from 21 August 2016 to 21 November 2016, so a fare was recorded at most 92 days before departure. Our model predicts at most 45 days ahead of departure; collecting over the longer 92-day window ensured that we had enough data for every horizon up to 45 days.

As the figure shows, the dates cascade: each successive collection day is one day closer to the final departure date, so we have only one day's data for flights that are 92 days from departure. Therefore, to restrict our model to 45 days to departure, we had to collect data up to 92 days before departure to obtain enough valid data.
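The cascading effect can be checked with a little date arithmetic. The sketch below assumes that one collection run happened every day from 21 August 2016 to 21 November 2016, and that each run covered every departure date from that day up to 21 November; the exact schedule is an assumption, and the function names are illustrative.

```python
from collections import Counter
from datetime import date, timedelta

FIRST_DAY = date(2016, 8, 21)   # assumed first collection date
LAST_DAY = date(2016, 11, 21)   # last departure date collected


def days_to_departure(taken, departure):
    """Number of days between the collection date and the departure date."""
    return (departure - taken).days


def observations_per_horizon(first_day, last_day):
    """Count (taken date, departure date) pairs per days-to-departure value.

    Assumes one run per day, each covering all departure dates from
    the run date up to last_day.
    """
    span = (last_day - first_day).days
    counts = Counter()
    for t in range(span + 1):
        taken = first_day + timedelta(days=t)
        for d in range(t, span + 1):
            departure = first_day + timedelta(days=d)
            counts[days_to_departure(taken, departure)] += 1
    return counts


counts = observations_per_horizon(FIRST_DAY, LAST_DAY)
print(days_to_departure(FIRST_DAY, LAST_DAY))  # 92
print(counts[92], counts[45])                  # 1 48
```

Under these assumptions, the 92-day horizon is observed on a single collection day, while the 45-day horizon is observed on 48 different days, which is why the collection window has to be much longer than the prediction window.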

Automating the Collection

The above script had to be run daily to get the required data. Running it manually every day would be impractical, and there are many ways to automate it. Cloud servers such as Microsoft Azure or AWS EC2 can be used to host the Python script.

We initially used Microsoft Azure, where setting up a virtual server is quite easy. However, the free trial period was limited, so we had to move to our institute's local server. Linux provides a convenient built-in scheduler called cron, whose scheduled tasks (cron jobs) are very easy to set up. You can read a detailed blog on automating a Python script here.
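A rough sketch of a cron-friendly setup is shown below. The crontab entry in the comment, the file paths, and the `output_path` helper are hypothetical and are not taken from the original script; the schedule shown (every day at 06:00) is only an example.

```python
# Hypothetical crontab entry (added with `crontab -e`) that runs the
# collection script every day at 06:00 and appends its output to a log:
#
#   0 6 * * * /usr/bin/python3 /home/user/fares/collect.py >> /home/user/fares/cron.log 2>&1

import os
from datetime import date


def output_path(out_dir, taken_date):
    """Return a per-day CSV path so daily runs never overwrite each other."""
    return os.path.join(out_dir, "fares_{}.csv".format(taken_date.isoformat()))


# Cron jobs do not start in the script's directory, so the script should
# be given absolute paths (the directory below is a placeholder).
todays_file = output_path("/home/user/fares/data", date.today())
```

Writing one file per collection date also makes it easy to spot a day on which the scheduled job failed to run.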